
Yang Zhou

Hi, I'm a PhD student at the University of Toronto, supervised by Prof. Steven Waslander in the Toronto Robotics and AI Lab (TRAILab). Previously, I received my B.Eng. in Computer Science from Hunan University, working with Prof. Jianxin Lin. I'm broadly interested in autonomous driving and generative world models. I'm always open to chatting or collaborating, so feel free to reach out!

Email / Google Scholar / Twitter / GitHub


Research

I work at the intersection of computer vision and robotics, with a focus on driving world models, video/trajectory generation, and motion planning.


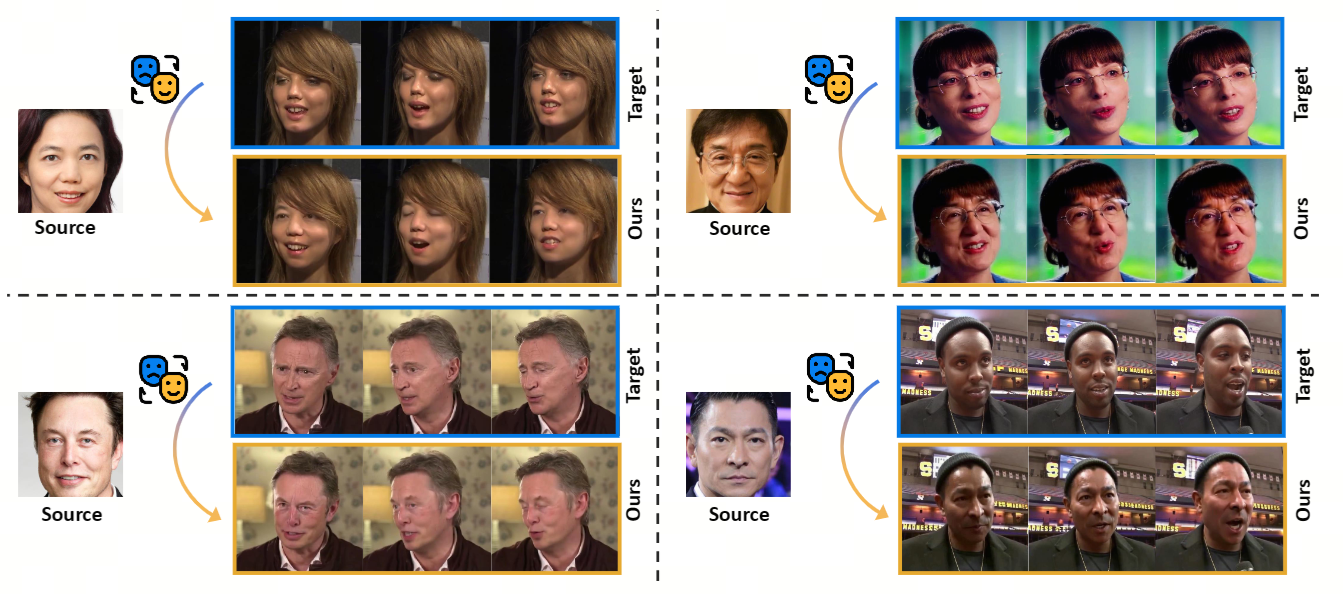

VividFace: A Robust and High-Fidelity Video Face Swapping Framework

Hao Shao, Shulun Wang, Yang Zhou, Guanglu Song, Dailan He, Zhuofan Zong, Shuo Qin, Yu Liu, Hongsheng Li

NeurIPS, 2025

[Project Page] [Paper] [Code]

We propose a diffusion-based framework for video face swapping, featuring hybrid training, an AIDT dataset, and 3D reconstruction for superior identity preservation and temporal consistency.


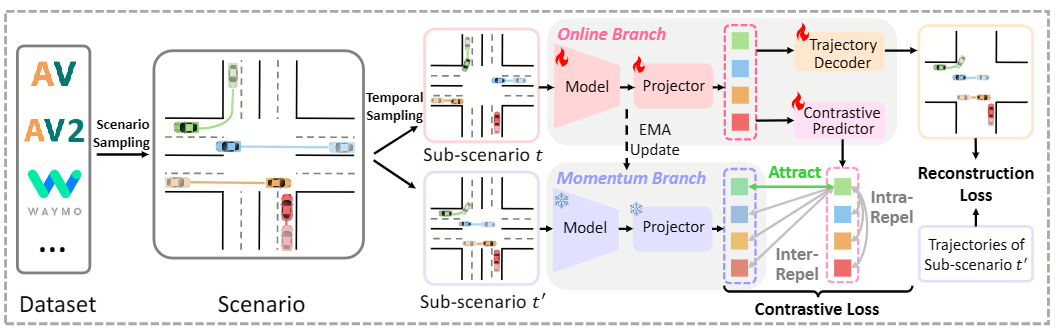

SmartPretrain: Model-Agnostic and Dataset-Agnostic Representation Learning for Motion Prediction

Yang Zhou*, Hao Shao*, Letian Wang*, Steven L. Waslander, Hongsheng Li, Yu Liu

ICLR, 2025

[Paper] [Code]

We propose SmartPretrain, a general and scalable self-supervised learning framework for motion prediction, designed to be both model-agnostic and dataset-agnostic.


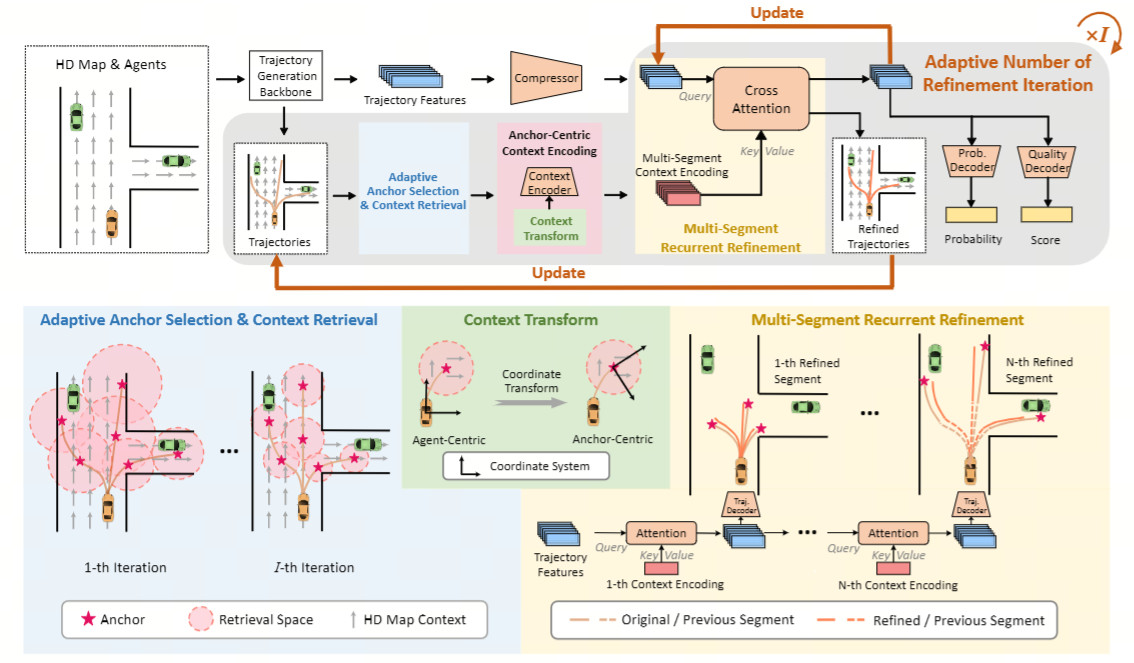

SmartRefine: A Scenario-Adaptive Refinement Framework for Efficient Motion Prediction

Yang Zhou*, Hao Shao*, Letian Wang, Steven L. Waslander, Hongsheng Li, Yu Liu

CVPR, 2024

[Paper] [Code]

We introduce a scenario-adaptive multi-round refinement strategy that boosts trajectory prediction with minimal extra compute.


Template inspired by Jon Barron.